Confidential Computing Meets NVIDIA HGX™ B200: Secure AI Without the Performance Trade-Off

Corvex completes full confidential computing deployment combining state-of-the-art security with near-native performance

As AI systems move deeper into regulated, high-value, and privacy-sensitive domains, securing data while it is being processed has become an operational requirement. Encrypting data at rest and in transit is no longer sufficient when model weights, intermediate activations, and sensitive inputs must reside in memory during training and inference.

This is the gap confidential computing (CC) was designed to solve.

Corvex has completed a full confidential computing deployment on NVIDIA HGX™ B200 systems, confirmed that the NVSwitch / NVLink fabric is encrypted, and successfully performed remote attestation of both CPU and GPU using Intel Trust Authority (ITA).

The result is a production-ready NVIDIA HGX B200 confidential computing platform that delivers strong, hardware-enforced data protection with near-native performance.

Confidential Computing Defined: Protecting Data While It’s in Use

Traditional security models focus on encrypting data at rest and in transit. For AI workloads, however, the most sensitive exposure occurs during execution, when data is actively processed in memory by CPUs and GPUs.

Confidential computing closes this gap by using hardware-based Trusted Execution Environments (TEEs) to ensure that:

- Data and model weights remain protected during execution

- Memory contents are isolated from the host OS and hypervisor

- Infrastructure operators cannot access workloads, even with privileged access

- Workloads can be cryptographically verified before execution via remote attestation

For AI training, inference, and other sensitive workloads (e.g. proprietary models, regulated data, or valuable IP), this enables a zero-trust execution model where workloads can run without assuming trust in the underlying infrastructure.

Deploying Secure AI Computing at Scale

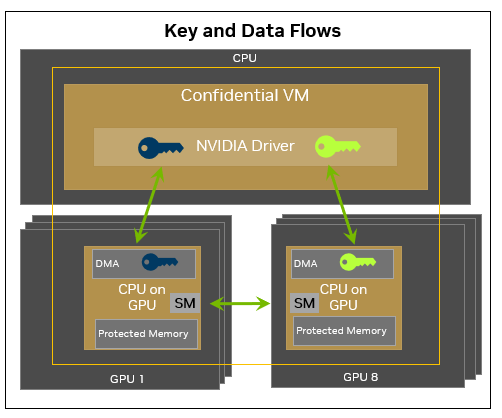

NVIDIA’s Blackwell architecture introduces a material shift in what confidential computing can support at scale.

Earlier GPU generations enabled memory encryption but left gaps for multi-GPU workloads. On prior platforms, GPU-to-GPU communication over NVLink and NVSwitch was not encrypted, limiting the viability of confidential computing for large models that rely on distributed execution.

With the latest NVIDIA HGX B200 firmware release, that limitation is removed. Data can now remain protected not only inside the GPU, but also while moving between GPUs in multi-GPU, multi-socket systems.

Corvex’s deployment confirms support for:

- NVIDIA Confidential Computing on NVIDIA Blackwell systems

- Encrypted NVSwitch / NVLink communication between GPUs

- TEE-I/O, extending protection beyond the CPU boundary

- Joint CPU + GPU remote attestation via ITA

How NVIDIA HGX B200 Secures Workloads without Performance Penalties

Earlier generations of confidential computing could introduce minor performance impacts when running I/O-heavy inference workloads.

The NVIDIA HGX B200 has changed that equation.

Corvex’s deployment of NVIDIA HGX B200 with confidential computing (including Intel TDX and NVIDIA Confidential Computing) saw near-native performance, a major leap forward for secure AI. NVIDIA Confidential Computing on NVIDIA Blackwell delivers throughput nearly identical to that of unencrypted modes (NVIDIA).

The key insight is that confidential computing overhead diminishes as workload size and model complexity increase. For production-scale models such as Llama-3.1-70B, overall performance is dominated by GPU compute and memory throughput, so the relative cost of encryption falls to almost zero.
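The scaling argument can be illustrated with a back-of-the-envelope model. This is a minimal sketch, not a benchmark: it assumes encryption time is proportional to the bytes crossing the protected boundary, that it does not overlap with compute (a pessimistic simplification), and that protected traffic grows more slowly than compute as models get larger. The function name and every input value are hypothetical placeholders rather than measurements from the deployment.

```python
def encryption_overhead_fraction(compute_seconds, protected_bytes, crypto_bytes_per_second):
    """Share of step time spent encrypting, in a no-overlap additive model."""
    if compute_seconds < 0 or protected_bytes < 0:
        raise ValueError("compute_seconds and protected_bytes must be non-negative")
    if crypto_bytes_per_second <= 0:
        raise ValueError("crypto_bytes_per_second must be positive")
    crypto_seconds = protected_bytes / crypto_bytes_per_second
    total_seconds = compute_seconds + crypto_seconds
    return crypto_seconds / total_seconds if total_seconds > 0 else 0.0


# Hypothetical inputs: the same 1 GB of protected traffic per step,
# with compute time growing as the model gets larger.
for compute_seconds in (0.1, 1.0, 10.0):
    share = encryption_overhead_fraction(compute_seconds, 1e9, 1e11)
    print(f"compute={compute_seconds:>5}s  encryption share={share:.2%}")
```

Under these assumptions the encryption share shrinks as compute time grows while protected traffic stays fixed, which is the qualitative effect described above. Real systems overlap encryption with compute, so actual overhead would be expected to sit below what this model predicts.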

NVIDIA Blackwell addresses the remaining sources of overhead by introducing encryption engines purpose-built for AI workloads, rather than repurposed general-purpose logic. In addition, TEE-I/O extends protection across NVLink and NVSwitch fabrics, eliminating the PCIe I/O bottlenecks that constrained previous generations under confidential execution. Encrypted HBM access is also highly optimized and hardware-accelerated, allowing memory-intensive workloads to run with minimal performance impact. As a result, NVIDIA HGX B200 preserves its roughly 2× training and 2.5× inference performance advantage over NVIDIA HGX H200, even with confidential computing fully enabled.

What This Means for Customers

For customers running large language models or sustained training jobs, security no longer requires sacrificing throughput.

- Enterprise AI with full data encryption and privacy guarantees

- No meaningful performance sacrifice

- Secure multi-tenant AI becomes viable at scale

- Confidential AI moves from “experimental” to production-ready

Remote Attestation: Verifiable Trust by Design

Confidential computing is only meaningful if it can be verified.

That’s why remote attestation is a foundational requirement for confidential computing: it provides cryptographic proof that hardware, firmware, drivers, and system configuration are in a known, untampered state before sensitive workloads are allowed to run.

With NVIDIA HGX B200, Corvex supports joint CPU and GPU attestation, which allows customers to confirm that:

- CPU trusted execution environments are active and uncompromised,

- GPUs are operating in confidential computing mode, and

- The execution chain matches expected configurations before sensitive data is introduced.
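The three checks above can be sketched as a simple policy gate on the customer side. This is a minimal illustration under stated assumptions: the claim names (`cpu_tee_active`, `gpu_cc_mode`, `measurement`) and the `AttestationPolicy` type are hypothetical and do not reflect the actual Intel Trust Authority token schema, and the sketch assumes the attestation token's signature has already been verified before the claims reach this code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationPolicy:
    """Hypothetical policy: values a customer expects before releasing data."""
    allowed_measurements: frozenset  # expected configuration measurements (hex strings)


def attestation_failures(claims: dict, policy: AttestationPolicy) -> list:
    """Return the reasons the evidence fails the policy; an empty list means pass."""
    failures = []
    # Hypothetical claim names; a real token uses its own schema.
    if claims.get("cpu_tee_active") is not True:
        failures.append("CPU TEE is not reported as active")
    if claims.get("gpu_cc_mode") is not True:
        failures.append("GPU is not reported in confidential computing mode")
    if claims.get("measurement") not in policy.allowed_measurements:
        failures.append("measurement does not match an expected configuration")
    return failures


def may_release_data(claims: dict, policy: AttestationPolicy) -> bool:
    """Release sensitive data only when every check passes."""
    return not attestation_failures(claims, policy)


policy = AttestationPolicy(allowed_measurements=frozenset({"a1b2c3"}))
claims = {"cpu_tee_active": True, "gpu_cc_mode": True, "measurement": "a1b2c3"}
print(may_release_data(claims, policy))
```

Returning the list of failures rather than a bare boolean keeps an audit trail of why a workload was refused, which fits the evidence-based model described here. Missing claims are treated as failures, so the gate fails closed.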

This enables verifiable trust. Customers can independently validate system state and rely on evidence rather than assumptions, which supports auditability, compliance, and zero-trust deployment models.

Practical Use Cases Enabled Today

This architecture unlocks confidential AI use cases that were previously impractical at scale, including:

- Collaborative healthcare and life sciences research

Multiple institutions can contribute encrypted datasets to shared training environments without exposing raw patient data, while independently verifying system integrity.

- Confidential enterprise inference

Proprietary models, prompts, and outputs remain protected during runtime, even in shared or hosted environments.

- Secure multi-tenant AI platforms

Strong hardware isolation reduces trust assumptions between tenants and operators, supporting enterprise SaaS and internal AI platforms.

- Sovereign and regulated workloads

Public-sector and critical infrastructure deployments gain cryptographic assurance that sensitive data cannot be accessed by infrastructure operators.

Confidential AI as an Infrastructure Property

At Corvex, confidential computing is treated as a foundational infrastructure capability, not an application-level feature. NVIDIA HGX B200 systems are deployed with confidential computing enabled end to end, including encrypted NVLink and NVSwitch communication, TEE-I/O, and coordinated CPU-GPU attestation.

At production scale, these protections introduce minimal performance overhead, allowing secure workloads to operate predictably and efficiently. The result is a practical foundation for confidential AI across regulated and IP-sensitive environments.

Closing Perspective

Confidential computing has reached a meaningful inflection point with the NVIDIA HGX B200. Customers can now secure multi-GPU AI workloads without compromising scale or performance, with encryption spanning the CPU, the GPUs, and the NVSwitch fabric, and can cryptographically verify the integrity of the trusted execution environment.

Corvex is proud to be among the first to deliver this capability in a production-ready environment, enabling customers to deploy the most demanding AI workloads with confidence, privacy, and performance.

Confidential AI is no longer experimental or impractical. It is operational, verifiable, and ready for real workloads.

Contact us for more information or a product demonstration.
